Running Ollama on Ubuntu with an Unsupported AMD GPU: A Performance Guide

Tools like Ollama make running large language models (LLMs) locally easier than ever, but some configurations can pose unexpected challenges, especially on Linux. Without proper GPU utilisation, even a machine with a powerful graphics card like my AMD RX 6700XT can be frustratingly slow. In this post, I'll walk you through how I overcame compatibility issues on Ubuntu to unlock the full potential of my GPU for models like Llama and DeepSeek.

Running LLMs locally

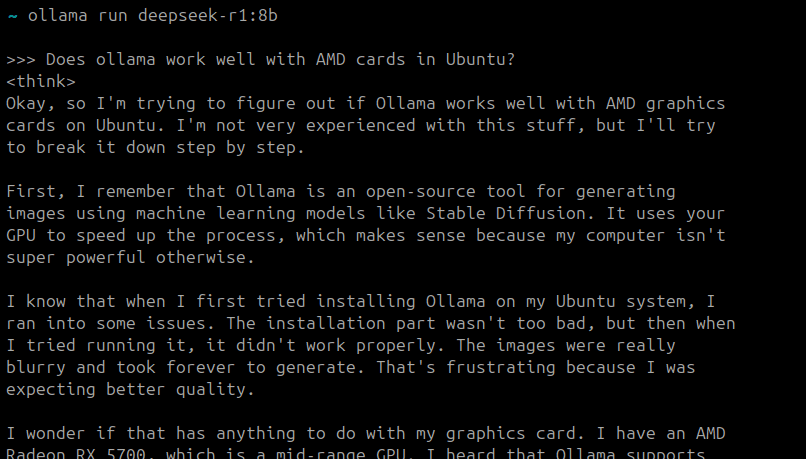

Ollama is one of the simplest ways to get up and running quickly, with installers available for Windows, macOS, and Linux. Once it's installed, downloading and running a model is as simple as `ollama run deepseek-r1:8b`, which gives you a CLI-based chat interface to the model.

As a rule of thumb, choosing a model about 1 GB smaller than your available RAM should hit the sweet spot: the most capable model that still leaves the system usable. A strong graphics card is also recommended, because a model that fits in the card's VRAM runs much faster than one running from system RAM.
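To turn that rule of thumb into a number for your own machine, here is a minimal shell sketch. It assumes "available RAM" means the `MemAvailable` figure from `/proc/meminfo` (reported in kB) and treats 1 GB as 1 GiB; the function name `max_model_size_gib` is hypothetical.

```shell
# Hypothetical helper: apply the "about 1 GB less than available RAM" rule of
# thumb. Takes available memory in kB (as reported by MemAvailable in
# /proc/meminfo) and prints a suggested maximum model size in GiB.
max_model_size_gib() {
  awk -v kb="$1" 'BEGIN {
    size = kb / 1048576 - 1   # kB -> GiB, minus 1 GiB of headroom
    if (size < 0) size = 0    # never suggest a negative size
    printf "%.1f\n", size
  }'
}
```

Usage: `max_model_size_gib "$(awk '/^MemAvailable:/ {print $2}' /proc/meminfo)"`. With 16 GiB available it prints `15.0`, pointing at models of roughly 15 GB or less.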

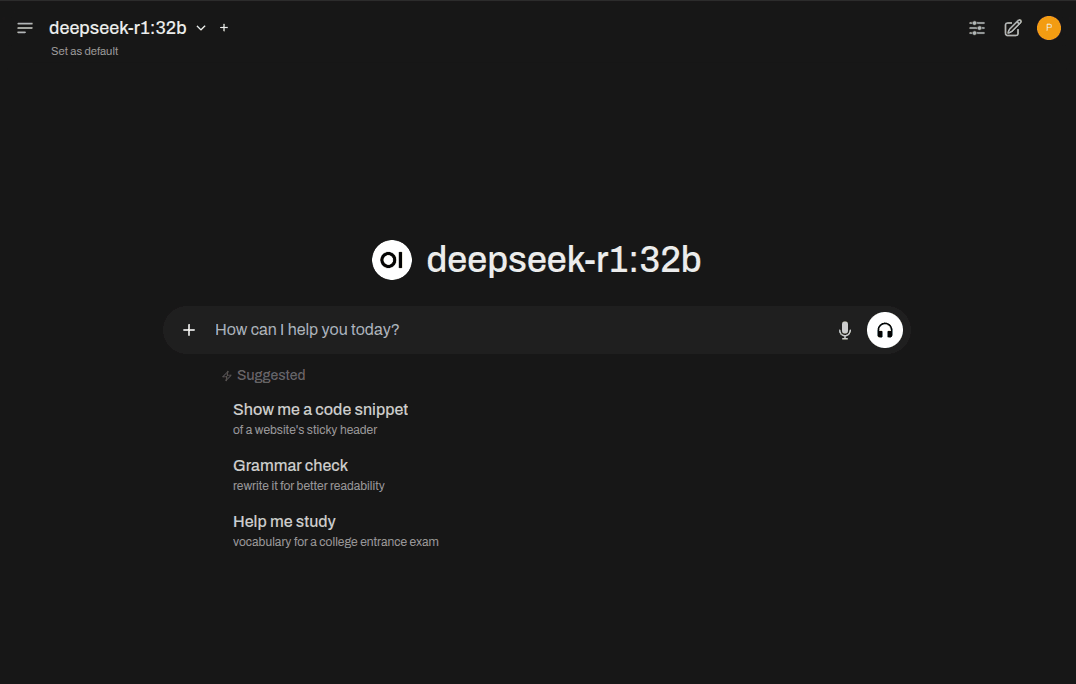

If you're used to the more visual interface of ChatGPT, installing Open WebUI lets you chat with the models Ollama has stored locally through a familiar interface.

While setting up Ollama is straightforward, optimising its performance, especially with AMD GPUs, may involve jumping through a couple of additional hoops.

Why Your AMD GPU Might Be Slowing You Down

After setting up Ollama on my Ubuntu 24 machine, the first thing that struck me was how slow it was. I was using DeepSeek, which tends to take longer to produce an answer than competing models, but even so, it felt inordinately slow. My AMD RX 6700XT has caused its share of configuration headaches over the years, so I suspected its VRAM wasn't being fully utilised. That would put the whole load of running the model on the CPU and the slower system RAM, slowing responses to a crawl.
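Before changing anything, it helps to put a number on "slow". Ollama's HTTP API (on `localhost:11434` by default) returns `eval_count` (tokens generated) and `eval_duration` (nanoseconds) with each non-streamed `/api/generate` response, and tokens per second is `eval_count / eval_duration * 10^9`. The sketch below assumes `curl` and `jq` are installed; `tokens_per_second` and `measure_tps` are hypothetical helper names. If you'd rather stay in the CLI, `ollama run --verbose` also prints an eval rate.

```shell
# Hypothetical helper: convert Ollama's eval_count (tokens) and
# eval_duration (nanoseconds) into tokens per second.
tokens_per_second() {
  awk -v count="$1" -v ns="$2" 'BEGIN {
    if (ns + 0 <= 0) { print "0.0"; exit }   # guard against missing/zero duration
    printf "%.1f\n", count * 1e9 / ns
  }'
}

# Send one non-streaming prompt to a local Ollama server and print tokens/s.
# Assumes the default address localhost:11434 and that curl and jq exist.
measure_tps() {
  model=${1:-deepseek-r1:8b}
  prompt=${2:-"Why is the sky blue?"}
  jq -n --arg m "$model" --arg p "$prompt" \
      '{model: $m, prompt: $p, stream: false}' |
    curl -s http://localhost:11434/api/generate -d @- |
    jq -r '"\(.eval_count) \(.eval_duration)"' | {
      read -r count ns
      tokens_per_second "$count" "$ns"
    }
}
```

Running `measure_tps` before and after the fix described below gives a like-for-like comparison on the same model and prompt.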

Checking VRAM usage

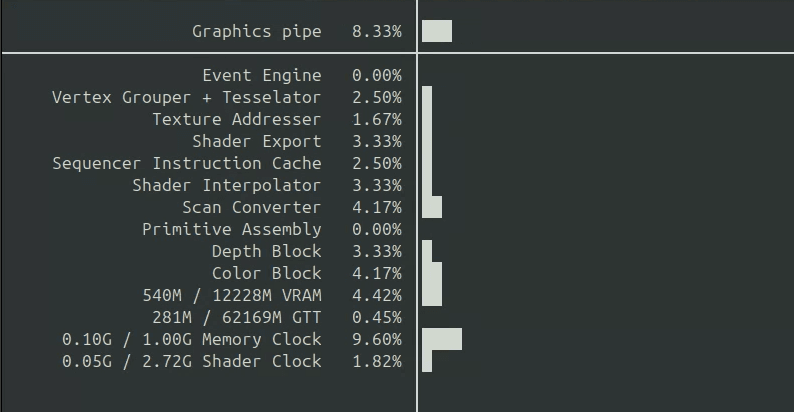

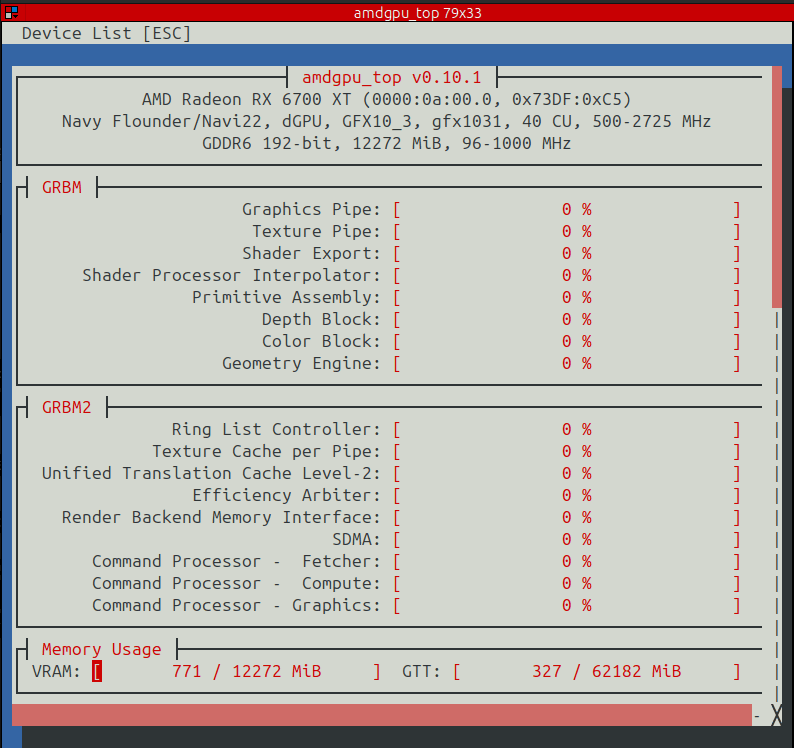

On Ubuntu, radeontop is a handy tool for visualising whether an AMD graphics card is being used, and it's available from the standard repositories:

```shell
sudo apt install radeontop
```

With radeontop open in one shell, I tabbed into the Open WebUI interface, entered a query, and waited. What I'm watching for is a change in VRAM usage while the query runs: if it stays static, the GPU isn't being used. There was very little movement in the radeontop stats; suspiciously, Ollama seemed to be ignoring the AMD card.
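If you'd rather check from a script than watch radeontop, the amdgpu kernel driver also exposes VRAM counters in sysfs as `mem_info_vram_used` and `mem_info_vram_total` (both in bytes). A minimal sketch, assuming the card is `card0` (it may be `card1` or another index on your system); `vram_usage` is a hypothetical name.

```shell
# Print amdgpu VRAM usage as "<used> / <total> MiB".
# $1 is the card's sysfs device directory; defaults to card0 (an assumption,
# check /sys/class/drm/ for the right index on your machine).
vram_usage() {
  dev_dir=${1:-/sys/class/drm/card0/device}
  used=$(cat "$dev_dir/mem_info_vram_used") || return 1
  total=$(cat "$dev_dir/mem_info_vram_total") || return 1
  echo "$((used / 1048576)) / $((total / 1048576)) MiB"
}
```

Usage: `while true; do vram_usage; sleep 1; done` while a query runs. A used figure that stays flat tells the same story as a static radeontop display.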

Checking the system journal for all Ollama messages since the last boot revealed a compatibility problem with my card:

```text
level=WARN source=amd_linux.go:378 msg="amdgpu is not supported (supported types:[gfx1030 gfx1100 gfx1101 gfx1102 gfx900 gfx906 gfx908 gfx90a gfx940 gfx941 gfx942])" gpu_type=gfx1031 gpu=0 library=/usr/local/lib/ollama
level=WARN source=amd_linux.go:385 msg="See https://github.com/ollama/ollama/blob/main/docs/gpu.md#overrides for HSA_OVERRIDE_GFX_VERSION usage"
level=INFO source=amd_linux.go:404 msg="no compatible amdgpu devices detected"
```

Messages like `amdgpu is not supported` and `no compatible amdgpu devices detected` are never fun to see, and meant I could be in for a long night. They confirmed that Ollama wasn't using my GPU: the RX 6700XT reports itself as `gfx1031`, which isn't on the supported list, although its close relative `gfx1030` is. The model was falling back to the CPU and system RAM, drastically reducing performance.
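For reference, `journalctl -u ollama.service -b` shows the Ollama service's messages since the last boot. The log is noisy, so a small filter for GPU-related lines can save some scrolling. The patterns below are simply strings from the messages quoted above, and `gpu_log_lines` is a hypothetical name.

```shell
# Keep only GPU-detection lines from Ollama's log (read from stdin).
# The patterns are taken from the warning/info messages quoted above.
gpu_log_lines() {
  grep -E 'amdgpu|rocm|HSA_OVERRIDE_GFX_VERSION|inference compute'
}
```

Usage: `journalctl -u ollama.service -b --no-pager | gpu_log_lines`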

Optimising Ollama's Performance on Ubuntu

Ollama does support AMD GPUs, but only a limited list of cards. However, as any long-time Linux user will know, "not supported" doesn't always mean "won't work"! The original error message mentioned overrides (`HSA_OVERRIDE_GFX_VERSION`), and that, combined with a very helpful thread on the project's issue tracker, pointed me to the solution: forcing Ollama to use my card by editing the `ollama.service` file.

On Ubuntu, the service file is usually at `/etc/systemd/system/ollama.service` (the "Loaded:" line of `systemctl status ollama` shows the exact path). It has a fairly standard layout:

```ini
[Unit]
Description=Ollama Service
After=network-online.target

[Service]
ExecStart=/usr/local/bin/ollama serve
User=ollama
Group=ollama
Restart=always
RestartSec=3
Environment="PATH=/home/conroyp/miniconda3/...

[Install]
WantedBy=default.target
```

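The key line is `Environment="PATH=..."`, which I swapped out for `Environment="HSA_OVERRIDE_GFX_VERSION=10.3.0"`. Replacing it isn't strictly required: systemd accepts several `Environment=` lines in one unit, so the override can also go on a new line beneath `PATH`. After saving the file, reload systemd's configuration and restart the Ollama service: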

```shell
sudo systemctl daemon-reload && sudo systemctl restart ollama.service
```
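An alternative that leaves Ollama's own unit file untouched (and so may survive a reinstall that rewrites it) is a systemd drop-in: `sudo systemctl edit ollama.service` opens `/etc/systemd/system/ollama.service.d/override.conf`, where a `[Service]` section with just the extra `Environment=` line is enough. The function below is a hypothetical sketch that writes the same file non-interactively; the target directory is a parameter so it can be tried somewhere harmless first.

```shell
# Write a systemd drop-in that sets HSA_OVERRIDE_GFX_VERSION for Ollama.
# $1: override version (e.g. 10.3.0)
# $2: drop-in directory (defaults to the standard one for ollama.service)
write_gfx_override() {
  version=$1
  dropin_dir=${2:-/etc/systemd/system/ollama.service.d}
  [ -n "$version" ] || { echo "usage: write_gfx_override VERSION [DIR]" >&2; return 1; }
  mkdir -p "$dropin_dir" || return 1
  printf '[Service]\nEnvironment="HSA_OVERRIDE_GFX_VERSION=%s"\n' "$version" \
    > "$dropin_dir/override.conf"
}
```

Writing to the default location needs root (for example, paste the function into a `sudo -i` shell), followed by the same `daemon-reload` and `restart` commands. `systemctl cat ollama.service` prints the unit together with any drop-ins, which is a quick way to confirm the override is in place.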

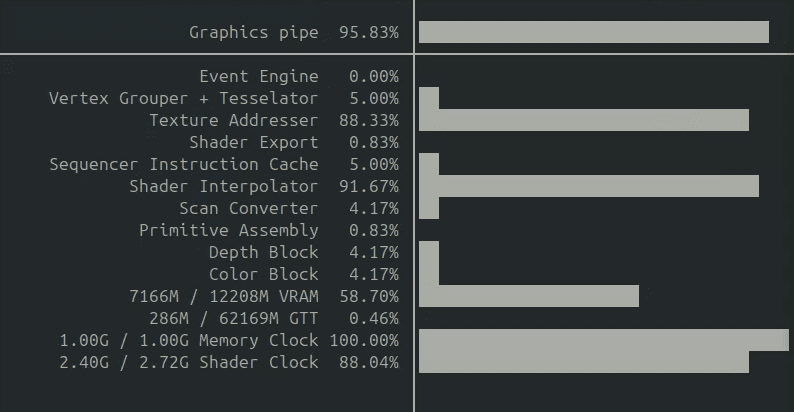

When I tabbed back to Open WebUI and ran a query similar to the earlier one, radeontop lit up with activity! Responses were much quicker, with the VRAM now being actively used.

Checking the system journal again, the warning about the missing amdgpu version file is still there, but Ollama now reports `skipping rocm gfx compatibility check` and lists the gfx1031 card as a ROCm inference device:

```text
level=WARN source=amd_linux.go:61 msg="ollama recommends running the https://www.amd.com/en/support/linux-drivers" error="amdgpu version file missing: /sys/module/amdgpu/version stat /sys/module/amdgpu/version: no such file or directory"
level=INFO source=amd_linux.go:391 msg="skipping rocm gfx compatibility check" HSA_OVERRIDE_GFX_VERSION=10.3.0
level=INFO source=types.go:131 msg="inference compute" id=0 library=rocm variant="" compute=gfx1031 driver=0.0 name=1002:73df total="12.0 GiB" available="608.7 MiB"
```
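To check the outcome from a script rather than by reading the journal, one option is to look for an `inference compute` line reporting `library=rocm`, like the last line above. This is a hypothetical helper keyed on the log format shown here, which could change between Ollama versions.

```shell
# Succeed (exit status 0) if Ollama log text on stdin contains an
# "inference compute" line reporting the ROCm library, i.e. the GPU was picked up.
ollama_uses_rocm() {
  grep -q 'msg="inference compute".*library=rocm'
}
```

Usage: `journalctl -u ollama.service -b --no-pager | ollama_uses_rocm && echo "Ollama found a ROCm GPU"`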

What does HSA_OVERRIDE_GFX_VERSION=10.3.0 do exactly?

On Linux, Ollama uses AMD's ROCm libraries, which don't support every AMD GPU. Setting the `HSA_OVERRIDE_GFX_VERSION` environment variable tells ROCm to treat the card as a different, supported LLVM GPU target, given in `x.y.z` form (`10.3.0` corresponds to `gfx1030`). This effectively bypasses ROCm's hardware compatibility checks, so an unsupported GPU runs code built for a supported model. That's how GPU acceleration can be enabled on AMD cards which aren't officially supported but are close enough to a supported chip to do the job. My card reports as `gfx1031`, so pointing it at the nearest supported target, `gfx1030`, with `10.3.0` did the trick!
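The mapping between a `gfx` target name and an override string follows LLVM's AMDGPU naming: the digits after `gfx` are the major version, followed by one hexadecimal digit each for minor version and stepping, so `gfx1030` is `10.3.0` and `gfx90a` is `9.0.10`. The sketch below converts a name into that form and, as a simple heuristic (not an official ROCm or Ollama rule), picks the first supported target sharing the same major and minor version. Both function names are hypothetical.

```shell
# Convert an LLVM AMDGPU target name (e.g. gfx1031) into the x.y.z form
# used by HSA_OVERRIDE_GFX_VERSION: major digits, then one hex digit each
# for minor version and stepping.
gfx_to_hsa_version() {
  t=${1#gfx}            # drop the "gfx" prefix: 1031
  major=${t%??}         # everything but the last two characters: 10
  rest=${t#"$major"}    # the last two characters: 31
  minor=${rest%?}       # 3
  stepping=${rest#?}    # 1
  printf '%d.%d.%d\n' "$major" "$((0x$minor))" "$((0x$stepping))"
}

# Heuristic only: from a list of supported targets ($2...), print the override
# for the first one with the same major.minor as the detected target ($1).
suggest_override() {
  want=$(gfx_to_hsa_version "$1"); want=${want%.*}   # e.g. 10.3
  shift
  for target in "$@"; do
    v=$(gfx_to_hsa_version "$target")
    if [ "${v%.*}" = "$want" ]; then
      echo "$v"
      return 0
    fi
  done
  return 1
}
```

Fed the detected type and the supported list from the earlier warning, `suggest_override gfx1031 gfx1030 gfx1100 gfx1101 gfx1102 gfx900 gfx906 gfx908 gfx90a gfx940 gfx941 gfx942` prints `10.3.0`, the value that worked here. A shared major and minor version is only a starting point, not a guarantee that the override will work.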

Bonus - better visuals!

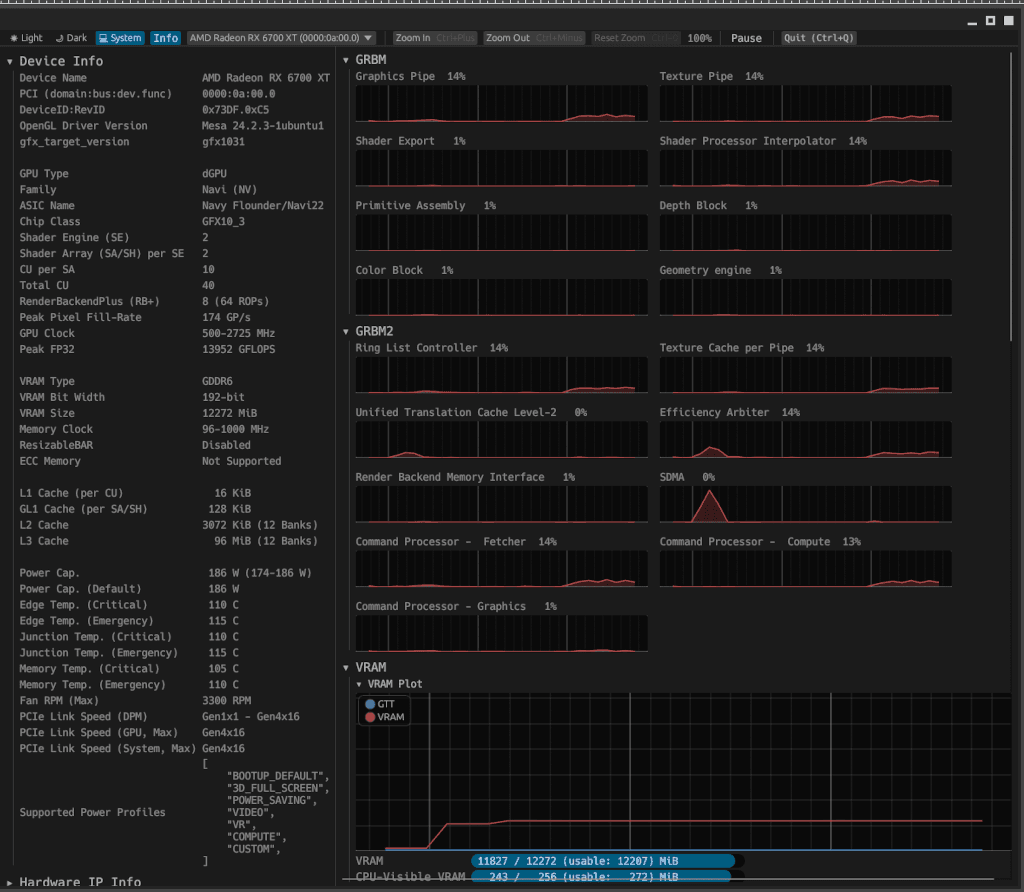

Radeontop works well, though it hasn't had any commit activity recently. A post on Casey Primozic's blog introduced me to amdgpu_top, a Rust-based tool with both a standard terminal mode and a more visual GUI mode:

```shell
sudo apt install cargo
# cargo installs binaries into ~/.cargo/bin, which may need adding to PATH
cargo install amdgpu_top
amdgpu_top --gui
```

With a simple configuration tweak, I was able to get my AMD GPU back to work, drastically improving response times in Ollama. If you're experiencing similar challenges, don't let "unsupported" scare you off: give this solution a try and let me know how it works for you!

PHP UK Conference, London 2026

In February 2026, I'll be speaking at the PHP UK Conference in London. I'll be telling the story behind EverythingIsShowbiz.com, a site that went from a vibe-coded side project to a useful experiment in integrating AI into PHP workflows.

Get your ticket now and I'll see you there!