Will valid markup now help your Google ranking?

Google recently published a list of 30 updates made to search over the tail end of 2011. Tucked away in the middle of the list is a mention of improved support for rich snippets, covering shopping, recipes and reviews. The list also notes that a rich snippet can only be shown for a page containing a single item, which rules out snippets for pages such as an Amazon search for iPods.

However, it looks like Google has also been testing ways of extracting structured data from pages that have no defined rich snippet and contain multiple items, starting with real estate classifieds. What's equally interesting is that clear, valid markup may have an extra impact on how your site appears in Google's results, and on how many visitors click through!

What’s a rich snippet?

Traditionally “snippets” were the information Google would display about a page when it showed up for a given search term. This would include the page title, a link and some short text from the page.

Google first introduced “rich snippets” in 2009. They are small pieces of additional markup that a webmaster can place on a site to give search engines a more structured view of the information on a page. This in turn allows the search engine to show more relevant information in the “snippets” a user sees when searching.

Google regularly uses recipes as an example of how rich snippets are best used.

There is a long and detailed list of item types with rich snippet support at schema.org.
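For readers who haven't seen this markup, here is a minimal Python sketch that emits a schema.org Recipe item using HTML microdata attributes (`itemscope`, `itemtype`, `itemprop`), one of the syntaxes search engines read for rich snippets. The helper name `recipe_microdata` and the sample recipe are made up for illustration; Google's own documentation remains the authority on which properties it actually uses.

```python
from html import escape


def recipe_microdata(name: str, author: str, prep_minutes: int, ingredients: list[str]) -> str:
    """Return an HTML fragment describing one recipe with schema.org microdata.

    Hypothetical helper for illustration; only the attribute and property
    names (itemscope, itemtype, itemprop, Recipe, name, author, prepTime,
    recipeIngredient) come from HTML microdata and schema.org.
    """
    lines = [
        # itemscope starts a new item; itemtype says which schema.org type it is
        '<div itemscope itemtype="https://schema.org/Recipe">',
        f'  <h1 itemprop="name">{escape(name)}</h1>',
        f'  <p>By <span itemprop="author">{escape(author)}</span></p>',
        # prepTime takes an ISO 8601 duration (e.g. PT15M) in the datetime attribute
        f'  <p>Prep time: <time itemprop="prepTime" datetime="PT{prep_minutes}M">'
        f"{prep_minutes} minutes</time></p>",
        "  <ul>",
    ]
    for ingredient in ingredients:
        # The same property repeats once per ingredient
        lines.append(f'    <li itemprop="recipeIngredient">{escape(ingredient)}</li>')
    lines += ["  </ul>", "</div>"]
    return "\n".join(lines)


if __name__ == "__main__":
    print(recipe_microdata("Soda bread", "A. Baker", 15, ["450g flour", "400ml buttermilk"]))
```

The output is an ordinary `<div>` that renders like any other HTML, but a parser can read the name, author, preparation time and ingredients without guessing at the page layout. Note that the page describes exactly one item, in line with the single-item rule mentioned earlier.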

What about sites which don’t have a relevant rich snippet?

The vast majority of sites on the net either don't have content which fits into the supported rich snippet categories, or haven't implemented support for them. So, whilst data defined using rich snippets is helpful to Google, it needs a way to get similarly structured data from sites without rich snippets defined.

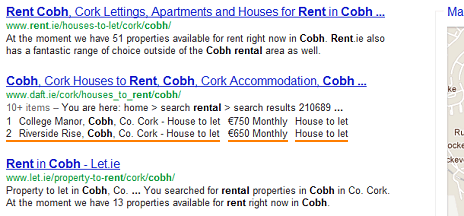

In my day job, I noticed that searches where our classified sites daft.ie, rent.ie and let.ie typically perform well were starting to show snippets in a slightly different format, despite no changes being made to the markup on those sites. Trying a handful of slightly different searches also showed significant changes in the snippet being shown (again, with no markup change), which suggests that Google is actively working on its algorithms in this area.

Compare and contrast

Start with a search for “Mallow rental”: the daft.ie result is the search results page for rental properties in Mallow, Co. Cork. So far, so what?

What's interesting here is that Google has broken the content out into address/type and price sections, making it very clear how much the top properties cost to anyone performing the same search. This is without any changes being made to the markup on the daft.ie site.

In the same set of search results, the let.ie results are displayed similarly. Yet property.ie, which has very similar markup, is not broken out. So it looks like Googlebot is slowly working its way through various sites. Further queries on daft.ie, however, show that the algorithm has been tweaked since the Mallow results above.

In the daft.ie result above, the property type now appears to have been broken out into a separate section on each line (the red lines are mine). Again, no changes to the markup have been made, but Googlebot appears to recognise that the “-” between the address and “House to let” is a logical break between two connected data points describing different attributes of the same property.
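To make the “-” observation concrete, here is a rough Python sketch of the kind of heuristic that could produce this breakdown: pull out a euro price with a regular expression, then split what's left on the first “ - ” into address and property type. This is a guess for illustration only, not Google's algorithm; the function name `split_listing` and the sample listing text are hypothetical.

```python
import re
from typing import Optional, TypedDict

# Hypothetical pattern: a euro amount such as "€950" or "€1,250"
PRICE_RE = re.compile(r"€\s*(\d[\d,]*)")


class Listing(TypedDict):
    address: str
    property_type: Optional[str]
    price_eur: Optional[int]


def split_listing(line: str) -> Listing:
    """Guess address, property type and price from one free-text result line."""
    match = PRICE_RE.search(line)
    price = int(match.group(1).replace(",", "")) if match else None
    # Ignore the price and anything after it (e.g. "per month")
    text = line[: match.start()] if match else line
    # Treat the first " - " as the break between address and property type
    address, sep, rest = text.partition(" - ")
    property_type = rest.strip(" ,") if sep else None
    return {
        "address": address.strip(" ,"),
        "property_type": property_type or None,
        "price_eur": price,
    }


if __name__ == "__main__":
    print(split_listing("12 Main Street, Mallow, Co. Cork - House to let, €950 per month"))
```

For that sample line the sketch returns an address of `12 Main Street, Mallow, Co. Cork`, a type of `House to let` and a price of 950. A line with no “ - ” separator keeps everything before the price as the address, which is roughly where a heuristic this simple stops being useful.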

So what exactly is happening here?

In summary, it looks like Google has been aggressively testing ways to derive structured data from nominally free-form webpages. This type of behaviour has been spotted in the past, when Google snippets showed a list of results from a page which was itself a formatted list. However, to the best of my knowledge, this automated breaking out of key data is a new tweak.

It would also seem to suggest that well-structured, clear markup is going to be increasingly important in future. Google has historically downplayed the importance of markup which validates, saying that it has no significant impact on a site's ranking. However, the sites listed above which show these new types of results are XHTML 1.0 Strict compliant. There's no way of knowing exactly what goes on inside Googlebot, but it stands to reason that clear markup which is simple to parse consistently makes it easier to extract this sort of information (the relative importance of each piece of data).
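As a rough illustration of why consistently parseable markup helps, the Python sketch below extracts address and price fields from a list of listings using the standard library's strict XML parser. The fragment, its class names and `extract_listings` are all hypothetical, and this is not a claim about how Googlebot parses pages; HTML parsers are generally far more forgiving than a strict XML parser. It only shows that well-formed markup yields the same structure every time, while a single unclosed tag makes a strict parser give up entirely.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed XHTML fragment for a page of rental results.
# The class names "listing", "address" and "price" are made up.
GOOD = """<ul>
  <li class="listing"><span class="address">Main Street, Mallow</span>
    <span class="price">€950</span></li>
  <li class="listing"><span class="address">Bridge Street, Mallow</span>
    <span class="price">€800</span></li>
</ul>"""

# The same fragment with the first <li> left unclosed. Browsers recover
# from this, but a strict XML parser rejects the whole document.
BAD = GOOD.replace("€950</span></li>", "€950</span>", 1)


def extract_listings(markup: str) -> list[dict[str, str]]:
    """Return one {class: text} dict per <li class="listing"> element."""
    root = ET.fromstring(markup)  # raises ET.ParseError if not well-formed
    listings = []
    for item in root.iter("li"):
        if item.get("class") != "listing":
            continue
        # Map each span's class attribute to its trimmed text content
        listings.append(
            {span.get("class", ""): (span.text or "").strip() for span in item.iter("span")}
        )
    return listings


if __name__ == "__main__":
    print(extract_listings(GOOD))
    try:
        extract_listings(BAD)
    except ET.ParseError as err:
        print("strict parser rejected the markup:", err)
```

The well-formed version yields two tidy address/price records; the broken one yields nothing at all. A lenient parser would still produce something from the broken markup, but what it produces depends on its recovery rules, which is exactly the kind of inconsistency clean markup avoids.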

Google has regularly promoted the benefit of rich snippets for click-through rates, so if these pseudo-rich snippets have a similar effect, clear and consistent markup on your site may have a direct impact on visitor numbers.

If you’ve any other examples of this type of intelligent formatting or theories on what’s going on above, please share them in the comments below!

PHP UK Conference, London 2026

In February 2026, I'll be speaking at the PHP UK Conference in London. I'll be telling the story behind EverythingIsShowbiz.com, a site that went from a vibe-coded side project to a useful experiment in integrating AI into PHP workflows.

Get your ticket now and I'll see you there!